By

Markus Lukasson

Share this post

In the race for AI adoption, "cool" often distracts from "profitable." Don't waste time on flashy experiments, your goal should be speed to value. This guide breaks down how to launch the fastest, simplest AI project possible. One that guarantees an immediate ROI.

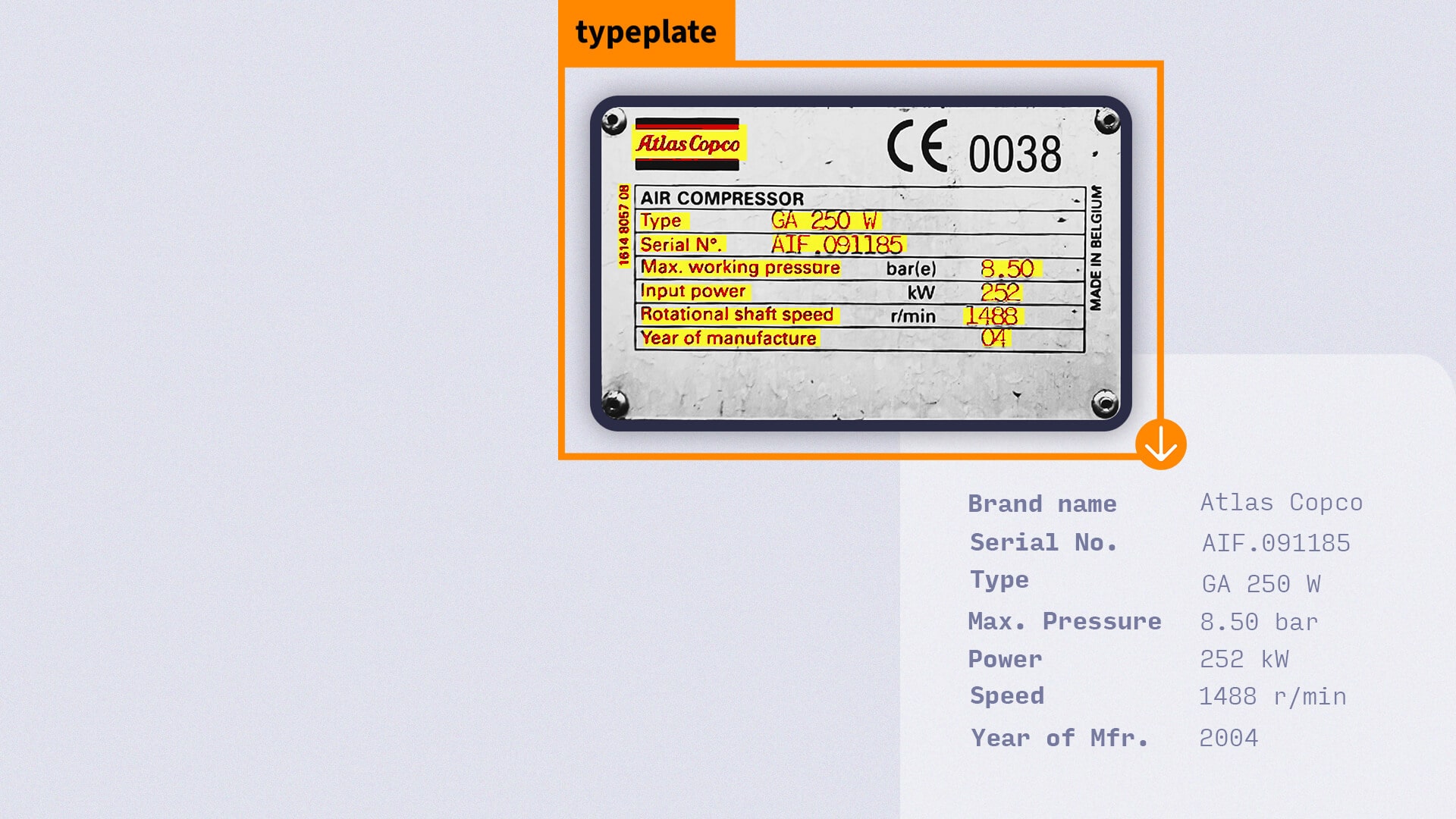

In manufacturing, service or warehouse operations, teams often waste time manually typing data from equipment typeplates, serial tags or labels. But manual data entry is inherently slow and error-prone. Users typically hate this task and will often avoid it when possible. If made mandatory, users may enter incorrect or placeholder data just to bypass the requirement, severely corrupting data quality.

But there is a quick win: Pair Optical Character Recognition (OCR) with an LLM and you will get an instant efficiency gain for any monotonous task requiring data entry. Just a picture is all you need. Your users will love it, your data will be clean, and most importantly, it will finally be captured at scale because of the low effort required.

The flow is simple: Use an Optical Character Recognition Engine to grab the text from a photo, and then use a Large Language Model to understand that text and turn it into structured data. This pairing is lightweight but very powerful. OCR grounds the process in real data (the exact text on the type plate or label), and the LLM adds intelligence to interpret that data reliably. Unlike previous solutions which relied on pre-build templates, the LLM is smart enough to handle different layouts on the fly. You get clean, usable data without a long development cycle. In fact, this approach addresses all the usual headaches:

It’s as simple as it sounds. One photo is all it takes. A technician uses a smartphone or tablet to snap a picture of a typeplate or label, that’s it. No Templates needed to capture the “right” text, no lengthy setup needed.

Behind the scenes, the OCR engine instantly reads all the text it can find in the image. Next, the LLM comes into play: it looks at that raw text and figures out the field types and relationships. In other words, it knows that “CAT 3516” is a model number, “2020” is the year, and “Serial No. 123456” is a serial identifier, for example. The AI then formats the output accordingly to a free or pre-defined response. In a blink, the data from the image is captured and structured without any human tweaking or typos. A single image of a type plate goes through this pipeline and emerges as useful data ready to go. And if required can be extended by the Geo-Location where the capture took place. This works right out of the box on virtually any input, whether it’s a printed metal plate, a tire’s sidewall markings, a VIN code on a vehicle, or any other printed/engraved text label. The combo of OCR+LLM handles it end-to-end: photo in, structured data out.

Here are a few scenarios showing the power of this combination: by specifying the required output attributes upfront (e.g., serial_number, type, year, vin), you can ensure that the structured output is always predictable and ready for integration into your existing systems, regardless of the input.

This approach isn’t just an incremental improvement, it’s a fundamental change from old-school data capture methods. No templates, no custom coding, no brittle rules. Traditional OCR solutions often require you to define a template or zone for each new document type (e.g. “Field X is in the top right corner of form Y”). If anything moved or a new format appeared, the system would break.

In our case, the LLM-based parser is layout-agnostic, it doesn’t matter if the serial number is at the top, bottom, or split across two lines, the AI will still recognize it by context. You’re no longer bound to rigid, pre-built field sets. The system adapts to plate layouts it’s never seen before. This makes it far more resilient to variation.

Another big differentiator is how well it handles real-world conditions. We know field data capture is never in a clean lab setting. Labels get dirty, plates corrode, stamps are hard to read. The new era of OCR engines is designed for the rough stuff. It can accurately pull text from virtually any surface or condition: rusty or scratched metal plates, engraved serial numbers on metal, faded or worn-out stickers, even handwritten entries or low-contrast engravings. Curved or angled surface? Bad lighting? No problem, advanced preprocessing and the AI’s context understanding mean it still succeeds where others would fail. In short, there are no special requirements, no need to perfectly line up a shot or use a specific template. If your eyes can see it, this AI can capture it. And yes, sometimes the OCR Engine makes mistakes, a 0 is mistaken for an O, a rn for an m. However, in our experience, these mistakes occur less frequently than typos made by humans.

So what’s the end product of all this? You get clean, structured data ready for use. Every time you scan a plate or tag, the system returns the critical fields in a tidy package. Think of things like serial numbers, model or part numbers, manufacturer names, dates, voltages, capacities, whatever text was on that label, now turned into labeled data points. The output is typically formatted in JSON (or a similarly structured format), which makes it immediately practical. In other words, the AI isn’t just spitting out a text blob; it’s giving you a structured record. For example, a single photo of a machine’s nameplate could return a JSON object with keys for

Because it’s already structured, you can feed it straight into your systems. Integration is a non-issue, you can dump this data into your CRM, ERP, maintenance database, or any other software with ease. There’s no need for someone to reformat or manually input it. In essence, you’ve turned a photo into immediately actionable data. This means faster updates, automated record-keeping, and the ability to trigger processes (like ordering a replacement part or scheduling service) without human transcription. It’s all delivered in a standard, computer-friendly format that fits into your existing workflows.

One of the best aspects of this OCR+LLM solution is how quickly you can try it and see results. This isn’t a six-month IT project, you can run a pilot in a matter of days (or even hours). Here’s how straightforward it is: gather a handful of sample plates or labels (just take pictures with a phone), and run them through our demo system we can setup for you. Immediately, you’ll get the extracted data. Now, measure it. How accurate was it compared to ground truth? How long did it take versus a human doing the same task? You can literally have one of your service technicians do a side-by-side: in one shift, let them manually type in a few items and also use the OCR+LLM tool for the same items. Compare the time spent and the error rates. The difference will be obvious, tasks that used to take minutes per item now take seconds, with fewer mistakes. Even saving just 1-2 minutes per identification task (which is very conservative) adds up to significant ROI across hundreds or thousands of manual typing tasks. The beauty is you get hard numbers almost instantly: X minutes saved per task, Y errors avoided. You don’t have to speculate about value; you can demonstrate it in a single afternoon.

This is arguably the easiest, lowest-effort AI project you can deploy, yet it delivers real-world impact you can measure. No complex integration, no big training period, just take some photos and watch it work. When the pilot results come in, they practically make the case for scaling it up: the time savings and accuracy improvements translate directly to cost savings and better service. It’s quick to implement, quick to show value.

To make it concrete, here are a few examples of what this OCR+LLM approach can tackle. Things that used to be nightmares (or outright impossible) to automate:

These are just a few scenarios, but they highlight a pattern: Repetitive, monotonous tasks that were once unsolvable or impractical to automate are now easily within reach. By combining OCR with an LLM, we’ve removed the old barriers of rigid formats and poor image conditions. The solution adapts to your reality, instead of forcing you to adapt your process to the solution. And it does all this with minimal effort to implement. For aftersales and service leaders, this means you finally have a practical AI tool that delivers quick ROI. It’s not a science project or a future promise, it’s a plug-and-play upgrade to your workflow that starts paying back immediately. In short, it’s the easiest high-impact AI win you can deploy today.

Results don’t come from theory; they come from advice applied to your context. Reach out to us if you would like to explore where OCR+LLM can speed up your processes.

Get answers to your specific question, and find out why nyris is the right choice for your business.